What is AI bias, and how does it affect real people?

The artificial intelligence (AI) era is advancing rapidly and transforming the digital landscape. From healthcare to finance, AI models are becoming ubiquitous, especially in customer care and recruitment. According to industry reports, around 35% of companies worldwide use AI in their business, and over 50% plan to use it in 2024.

As the use of AI grows, several questions have emerged about its fairness and bias. Humans are certainly error-prone, but algorithms aren't necessarily unbiased either. Even so, AI is already making important decisions, such as which advertisements you see, how a company responds to your query, whether you're admitted to your dream institution, and much more.

Unfortunately, few people understand the full implications of this transformation, which is already underway. This guide explains what AI bias is, why it happens, and how it can be controlled. Read on for all the important details.

AI bias means that the algorithms used to perform various tasks don't perform them neutrally. Instead, depending on their training data, they lean toward one extreme or the other, and that lean shows up in their results. Sometimes the bias propagates harmful ideas or beliefs, as when algorithms reinforce racial stereotypes.

Stanford University's AI Index Report 2023 defines artificial intelligence bias as an algorithm producing outputs that harm specific groups by perpetuating stereotypes. Conversely, AI is considered fair if its outputs neither favor a particular group nor discriminate against another.

AI bias can take many forms, producing both small and large-scale errors. For instance, a joint study by UNESCO, the OECD, and the Inter-American Development Bank showed that AI was discriminating against women candidates, perpetuating the long-standing bias against them in the workplace.

Similarly, AI-powered facial recognition technologies are more likely to misidentify people with darker skin tones. In one of the most striking AI bias examples, a study by Stanford University's Human-Centered AI Institute found that the contrastive language-image pretraining (CLIP) model misclassified Black people as nonhuman on multiple occasions.

Here are some of the reasons why AI shows bias:

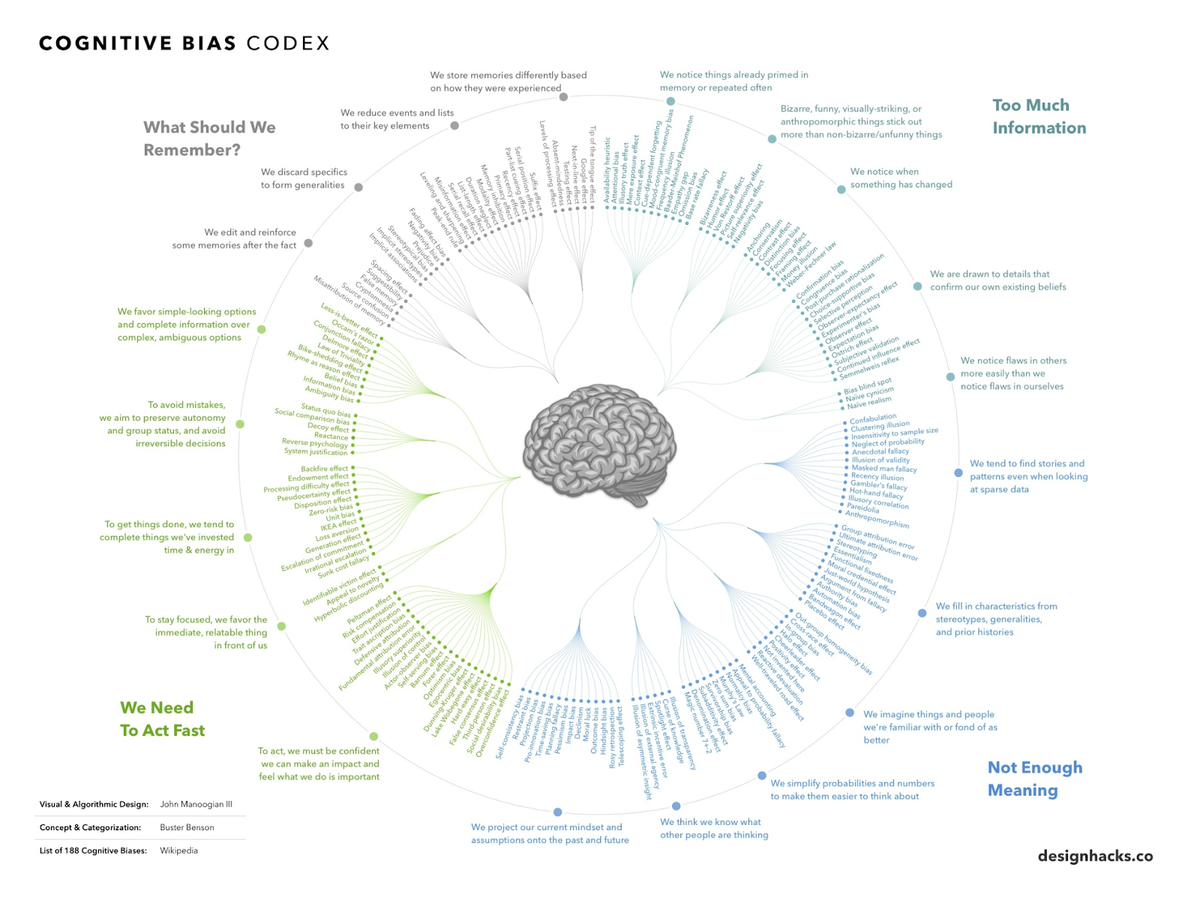

The primary reason AI shows bias is that humans build their own biases into these models. Psychologists have identified more than 180 cognitive biases, and any of them can find its way into the algorithms people create.

For instance, some people mistakenly think that hiding a protected class such as race from an AI model will make it less biased. However, the model can still rely on non-protected factors that correlate with that class, such as geographical data, and produce biased results. This phenomenon is known as proxy discrimination, because the correlated factor acts as a proxy (a stand-in) for the class that was removed from the training dataset.
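The following is a minimal sketch of proxy discrimination. Everything in it is hypothetical: the eight applicant records, the `zip` and `group` fields, and the approval rule are invented for illustration. The rule never reads the protected `group` field, yet because zip code correlates with group membership in this toy data, approval rates still differ sharply between groups.

```python
from collections import defaultdict

# Hypothetical toy applicants. "group" is the protected class;
# the screening rule below never reads it.
applicants = [
    {"zip": "10001", "group": "A"},
    {"zip": "10001", "group": "A"},
    {"zip": "10001", "group": "A"},
    {"zip": "10001", "group": "B"},
    {"zip": "20002", "group": "B"},
    {"zip": "20002", "group": "B"},
    {"zip": "20002", "group": "B"},
    {"zip": "20002", "group": "A"},
]

def approve(applicant):
    """Hypothetical rule 'learned' from past data: approve zip 10001 only."""
    return applicant["zip"] == "10001"

def approval_rate_by_group(records):
    """Share of approved applicants per protected group (used for auditing only)."""
    approved = defaultdict(int)
    total = defaultdict(int)
    for r in records:
        total[r["group"]] += 1
        approved[r["group"]] += approve(r)  # True counts as 1
    return {g: approved[g] / total[g] for g in total}

rates = approval_rate_by_group(applicants)
print(rates)  # {'A': 0.75, 'B': 0.25}
```

Auditing outcomes by protected class, even when that class is excluded from the model's inputs, is one way such proxies can surface.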

Low-quality training data is another cause of AI bias. Such data often records past human decisions and can echo persistent inequities. For instance, if a company in a male-dominated industry trains its recruitment AI on data about previous employees, the algorithm is likely to discriminate against female candidates.

Similarly, language AI that takes its training data from social media and news reports will pick up the linguistic biases and prejudices already present in that text. This has happened with Google Translate, which has assigned genders when translating from gender-neutral languages like Hungarian. For instance, it might render gender-neutral sentences as "he works out" and "she cooks," reinforcing gender stereotypes.

Underrepresentation and overrepresentation are major issues when feeding training data to an AI model. Data scientists might exclude valuable entries, leaving certain groups underrepresented. Conversely, oversampling specific data can overrepresent some groups or factors. For instance, crimes in areas with more police patrols are more likely to be recorded, so those areas can be overrepresented in crime data.
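A simple first check for under- and overrepresentation is to compare each group's share of the training data with its share of a reference population. The sketch below assumes hypothetical group labels, counts, and population shares, plus an arbitrary 0.8 cutoff for flagging a group as underrepresented; a real audit would choose its reference population and tolerance for its own context.

```python
# Hypothetical group counts in a training set and reference population shares.
dataset_counts = {"group_a": 800, "group_b": 150, "group_c": 50}
population_share = {"group_a": 0.60, "group_b": 0.25, "group_c": 0.15}

def representation_ratios(counts, reference):
    """Each group's share of the data divided by its share of the population.
    Below 1.0 suggests underrepresentation; above 1.0, overrepresentation."""
    total = sum(counts.values())
    return {g: (counts[g] / total) / reference[g] for g in reference}

ratios = representation_ratios(dataset_counts, population_share)
for group, ratio in ratios.items():
    print(f"{group}: {ratio:.2f}")

# Assumed cutoff: flag any group at less than 80% of its expected share.
underrepresented = [g for g, r in ratios.items() if r < 0.8]
print("underrepresented:", underrepresented)
```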

Most people don't realize that AI models' interactions with real-world users can reinforce bias. For instance, a credit card company's ad-targeting algorithm could exploit existing social biases to show less educated customers offers with higher interest rates. Those customers click on the ads without knowing that much better deals are being shown to other social groups, and their clicks feed back into the model, reinforcing the algorithmic bias.

AI bias comes in various shapes and forms. Although the causes differ, from bad training data to the deployment environment, the result is always the same: an algorithm discriminates against a particular group or gives another group an unfair advantage. The most prominent types of AI bias are discussed in detail below, along with how each one affects algorithmic output.

One of the most common types of AI bias is historical bias, where an AI system makes decisions based on outdated data. A well-known example is the automated hiring system Amazon began building in 2014 to screen applicants, identify well-qualified candidates, and invite them for interviews.

Because Amazon's workforce consisted primarily of men, the AI system started discriminating against women and preferring men, even when the men were less qualified. That happened because the algorithm was trained on hiring data from the previous ten years. The observation that the workforce consisted mainly of men was correct, but the conclusion that men were better suited to the job was erroneous and biased.

Amazon realized by 2015 that the system was not rating candidates in a gender-neutral way and eventually scrapped the project. This example shows how AI trained on older data can propagate algorithmic bias against a specific group.

Labeling is another source of AI bias. AI algorithms have become considerably more autonomous, but they still rely heavily on humans to label data. In supervised learning, unlabeled data provides no context about what the correct output should be, and missing or inaccurate labels lead to erroneous decisions.

Most people are already familiar with labeled data. One of the most common examples is when a website asks you to prove you're human by showing you a picture of traffic lights divided into squares and asking you to select the squares that contain traffic lights. Humans can easily spot traffic lights in such pictures, whereas an AI model without enough labeled examples struggles to do so reliably.

The problem with not having enough labeled data is knowledge gaps, which can result in hallucinations. Even large language models (LLMs) like ChatGPT regularly exhibit this phenomenon. A hallucination occurs when an AI model lacks relevant data and fills in the gaps with plausible-sounding but incorrect content. LLMs can even fabricate statistics and facts when they have nothing relevant to draw on.

Sampling bias resembles historical bias in that it overrepresents or underrepresents certain groups, but its source is different. It usually occurs in the initial training phase, when the AI model receives skewed data that distorts its understanding of reality.

One pertinent example is the speech recognition technology developed by various companies. Many trained their programs on audiobooks, most of which were voiced by older white men. As a result, these programs were more accurate at transcribing English than other languages.

Moreover, they performed better for white male speakers than for female and non-white speakers. This example shows how skewed initial training data can shape an algorithm's behavior for a long time.

Aggregation bias, as the name suggests, results from aggregating training data from various sources or groups. When developers pool data from groups that behave differently, the algorithm learns a single pattern that may not fit each group, and its decisions become biased.

A fitting example is salaries across professions. Usually, as you climb the experience ladder, your salary keeps increasing. This holds in most industries, but not in professional sports, where athletes usually earn the most early in their careers. As they age, their salaries decrease and they move to less competitive leagues and teams. If you train an AI program on aggregated salary data, it will learn that pay rises with experience and discriminate against athletes, whose pay follows the opposite trend.
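To illustrate how pooling can hide a group's real pattern, this sketch fits a simple least-squares line to invented salary data (years of experience versus salary in thousands). The numbers, group sizes, and the helper `ols_slope` are hypothetical; the point is only that the pooled slope is positive even though athletes' pay falls with experience.

```python
def ols_slope(xs, ys):
    """Least-squares slope of ys on xs (simple linear regression)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    return cov / var

# Hypothetical data: years of experience and salary in thousands.
office_years = [2, 4, 6, 8, 10, 12, 14, 16, 18, 20]
office_pay = [40 + 3 * y for y in office_years]  # pay rises with experience
athlete_years = [1, 3, 5]
athlete_pay = [90, 70, 50]                        # pay falls with experience

office_slope = ols_slope(office_years, office_pay)
athlete_slope = ols_slope(athlete_years, athlete_pay)
pooled_slope = ols_slope(office_years + athlete_years, office_pay + athlete_pay)

print(f"office:   {office_slope:+.2f} per year")   # +3.00
print(f"athletes: {athlete_slope:+.2f} per year")  # -10.00
print(f"pooled:   {pooled_slope:+.2f} per year")   # +1.99
```

A model built on the pooled trend would predict rising pay for athletes, the opposite of what their own data shows. Fitting separate models per group, or giving the model the group as an input, is one common way to reduce this kind of aggregation bias.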

Using aggregated data to train AI can paint a distorted picture of the real world. As a result, employers relying on such biased software can treat a particular group unfairly.

Confirmation bias is one of the most familiar biases. It is the natural tendency to trust information that confirms our strongly held beliefs, which clouds our judgment. Many people fall prey to it when analyzing social or political issues.

This bias regularly emerges in healthcare. For instance, a doctor who distrusts AI might reject its diagnosis and misdiagnose the patient because the AI's conclusion doesn't match the doctor's existing knowledge about a disease. Similarly, a biased algorithm might misdiagnose a patient based on patterns in its training data rather than the patient's unique symptoms.

Evaluation bias can also be called scaling bias because it often arises when an AI model that performs well on a small sample is generalized to a much larger population. For instance, if you create a voter analysis algorithm that correctly predicts local election results, can it also predict results at the national level? Not reliably, because national voting patterns are far more varied than those in a single district.

AI models improve as they are trained on more data. However, if that data covers only a small fraction of the environment, the model cannot make correct predictions about the whole environment. AI models generally perform poorly in settings that differ from the data they were trained and evaluated on.

Data cannibalism is also called 'model collapse' in AI development. It differs slightly from the other biases because it concerns the AI models themselves rather than the outside environment provided by humans. As AI-generated content spreads across the internet, newer models will increasingly be trained on the outputs of existing models, creating an internal bias.

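As a result, these models would rely less and less on human-provided data and more on machine-generated data. With each generation, errors compound and rarer patterns from the original human data fade, so the content starts to look increasingly repetitive and robotic, and less grounded in fact.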
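Model collapse is often illustrated with a toy experiment like the one sketched below, which is not a simulation of real language models. Each "generation" fits a normal distribution to a small sample drawn from the previous generation's fit, standing in for a model trained only on its predecessor's output. The sample size, number of generations, and seed are arbitrary assumptions. Because small samples tend to miss the tails, the fitted spread tends to shrink over the generations, so later "models" reproduce an ever narrower slice of the original data.

```python
import random
import statistics

def simulate_model_collapse(generations=300, samples_per_generation=20, seed=0):
    """Toy illustration: each 'model' is a normal distribution fitted to
    samples drawn from the previous model. Returns the fitted std per generation."""
    rng = random.Random(seed)
    mu, sigma = 0.0, 1.0  # generation 0 stands in for human-produced data
    history = [sigma]
    for _ in range(generations):
        # "Train" the next model only on output generated by the current one.
        samples = [rng.gauss(mu, sigma) for _ in range(samples_per_generation)]
        mu = statistics.fmean(samples)
        sigma = statistics.pstdev(samples)
        history.append(sigma)
    return history

stds = simulate_model_collapse()
for gen in (0, 50, 100, 200, 300):
    print(f"generation {gen:3d}: std = {stds[gen]:.6f}")
```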

Since AI's influence on crucial decision-making will only increase, it's critical to discuss how to reduce bias in machine learning. Fortunately, there are several practical ways to do so.

Real-world decisions are shaped by many cognitive biases, so the data extracted from them isn't fully trustworthy. That's not to say that real-world data is useless. One effective way to deal with this issue is to mix synthetic and real-world data to smooth out AI bias. For instance, synthetic data from a generative adversarial network (GAN) can go a long way toward creating more balanced training datasets.
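Training a GAN is beyond the scope of a short example, so the sketch below swaps in a much simpler technique: SMOTE-style oversampling, which creates synthetic rows for an underrepresented group by interpolating between pairs of its real rows. This simplified version picks random pairs rather than nearest neighbors as full SMOTE does, and the feature values are hypothetical.

```python
import random

def synthesize_minority(rows, target_count, seed=0):
    """Create synthetic rows by interpolating between random pairs of real
    minority-group rows (a simplified, SMOTE-style stand-in for a GAN)."""
    rng = random.Random(seed)
    synthetic = []
    while len(rows) + len(synthetic) < target_count:
        a, b = rng.sample(rows, 2)  # two distinct real rows
        t = rng.random()            # position on the segment between a and b
        synthetic.append(tuple(x + t * (y - x) for x, y in zip(a, b)))
    return synthetic

# Hypothetical numeric features, e.g. (years of experience, test score).
majority = [(5, 80), (7, 85), (3, 70), (10, 90), (6, 75), (8, 88)]
minority = [(4, 78), (9, 92)]

new_rows = synthesize_minority(minority, target_count=len(majority))
balanced_minority = minority + new_rows
print(len(majority), len(balanced_minority))  # 6 6
```

Interpolated rows only fill in the space between existing examples, so they balance the counts without adding genuinely new information about the group.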

Many AI models rely on older datasets, often dating back years or even decades. This creates a significant problem: what was considered fair back then might not be considered fair today. Similarly, the datasets companies use today might become obsolete in the coming years.

Companies should keep evaluating their training datasets and update them regularly. Automated tools such as data anonymization and sentiment analysis can help detect and reduce potential bias.
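Anonymization and sentiment analysis are not the only automated checks. The sketch below shows a different one, swapped in for illustration: a disparate impact ratio, which divides the lowest group selection rate by the highest and flags results under 0.8, a threshold borrowed from the "four-fifths" rule of thumb in U.S. employment guidelines. The dataset names and decisions are hypothetical; rerunning such a check whenever the training data changes is one way to make evaluation regular.

```python
def selection_rates(outcomes):
    """outcomes: mapping of group -> list of 0/1 decisions (1 = favorable)."""
    return {g: sum(d) / len(d) for g, d in outcomes.items()}

def disparate_impact_ratio(outcomes):
    """Lowest group selection rate divided by the highest (1.0 = parity)."""
    rates = selection_rates(outcomes)
    highest = max(rates.values())
    return 1.0 if highest == 0 else min(rates.values()) / highest

def audit(snapshots, threshold=0.8):
    """Return the names of snapshots whose ratio falls below `threshold`."""
    flagged = []
    for name, outcomes in snapshots.items():
        ratio = disparate_impact_ratio(outcomes)
        status = "FLAG" if ratio < threshold else "ok"
        print(f"{name}: ratio={ratio:.2f} {status}")
        if ratio < threshold:
            flagged.append(name)
    return flagged

# Hypothetical model decisions, re-evaluated on two versions of the data.
snapshots = {
    "2022-data": {"men": [1, 1, 0, 1, 0], "women": [1, 0, 1, 1, 0]},
    "2024-data": {"men": [1, 1, 1, 1, 0], "women": [1, 0, 0, 1, 0]},
}
flagged = audit(snapshots)
print(flagged)  # ['2024-data']
```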

AI algorithms are designed by people, so the people designing them significantly shape their behavior. That's why it is crucial to have a team that is diverse in experience, race, and gender. Such a team is better equipped to identify potential biases in data and remove them before they're reinforced once the AI model is in use. Moreover, diversity helps organizations build AI systems that understand different populations better and incorporate various perspectives.

Transparency is key to reducing AI bias and winning customers' confidence. AI systems are incredibly complex and becoming more intricate over time. Identifying biases in an AI system is a daunting task, which is why organizations should be as transparent as possible about how these systems make decisions. Transparency helps users become more comfortable with an AI system and builds mutual trust.

Ethical frameworks are an excellent way to ensure fairness, accountability, security, and transparency in AI systems. One example is the Artificial Intelligence Ethics Framework for the Intelligence Community, developed by the U.S. intelligence community to guide the creation of ethical AI. That such a prominent intelligence community has taken an interest in this area highlights the importance of building fair, ethical AI systems and reducing AI bias.

More and more businesses want to use AI to improve the customer experience. If you're one of them, Aidbase can help you build high-quality AI chatbots for customer service that give your users an excellent experience.

Creating an account with us gives you AI chatbots that can deliver better results than humans 90% of the time. You can train these chatbots on your own data and gradually fill training gaps as more data comes in. Moreover, their tone, colors, logos, branding, and more are fully customizable.

Contact us today and get started with world-class AI customer service.